VerneMQ and TCP buffers

TCP buffers are probably not the first thing you think about when you start working with VerneMQ.

But, oh my, are they essential!

MQTT is a TCP-based protocol, and VerneMQ is TCP/TLS server software. Knowing your way around TCP can make all the difference between a smooth messaging system and a stuttering or overloaded one. So we'd like to make sure you know how to configure at least the TCP buffer sizes, one of the most critical values to get right.

Now, I can't even name all those fancy TCP issues that can happen. Bufferbloat, problems with the Nagle algorithm, you name it.

Real experts are making a living on this (thank you, folks!).

So let's not talk about the issues, but rather about what you can actually configure and what you can't.

We have a couple of notes in our famous (har har) "Not A Tuning Guide". And you can find a couple of additional remarks in "A Typical VerneMQ deployment".

There, we describe how VerneMQ calculates TCP buffer sizes from OS settings, and how much RAM VerneMQ ends up using per connection. You can, therefore, control TCP buffer settings indirectly by changing OS settings, or directly by overriding the OS settings and manually configuring buffer sizes from within VerneMQ. The rule is that the more bandwidth or the lower the latency you require, the larger the TCP buffer sizes should be. We also show that TCP buffer sizes are one of the first points to consider when estimating RAM usage, especially for many connections.
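To make the RAM point concrete, here is a rough back-of-the-envelope sketch in Python. The function name, the connection count and the buffer sizes are our own illustrative choices, and the calculation assumes every connection uses the full size of each buffer, which is a worst case rather than what a real system will show.

```python
def estimate_buffer_ram(connections, sndbuf, recbuf, buffer):
    """Return worst-case (os_bytes, vernemq_bytes) for a number of connections.

    Assumption: each connection fully occupies its OS-level send and receive
    buffers plus one VerneMQ-level buffer. Real usage is usually lower.
    """
    os_bytes = connections * (sndbuf + recbuf)  # held by the OS kernel
    vernemq_bytes = connections * buffer        # held inside VerneMQ
    return os_bytes, vernemq_bytes


# Illustrative numbers only: 100,000 connections with 4 KiB / 16 KiB / 32 KiB buffers.
os_bytes, vernemq_bytes = estimate_buffer_ram(100_000, 4096, 16384, 32768)
print(f"OS side:      {os_bytes / 2**20:.0f} MiB")
print(f"VerneMQ side: {vernemq_bytes / 2**20:.0f} MiB")
```

Even with modest per-connection sizes, the totals reach gigabytes once you have many connections, which is why buffer sizes belong in any early RAM estimate.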

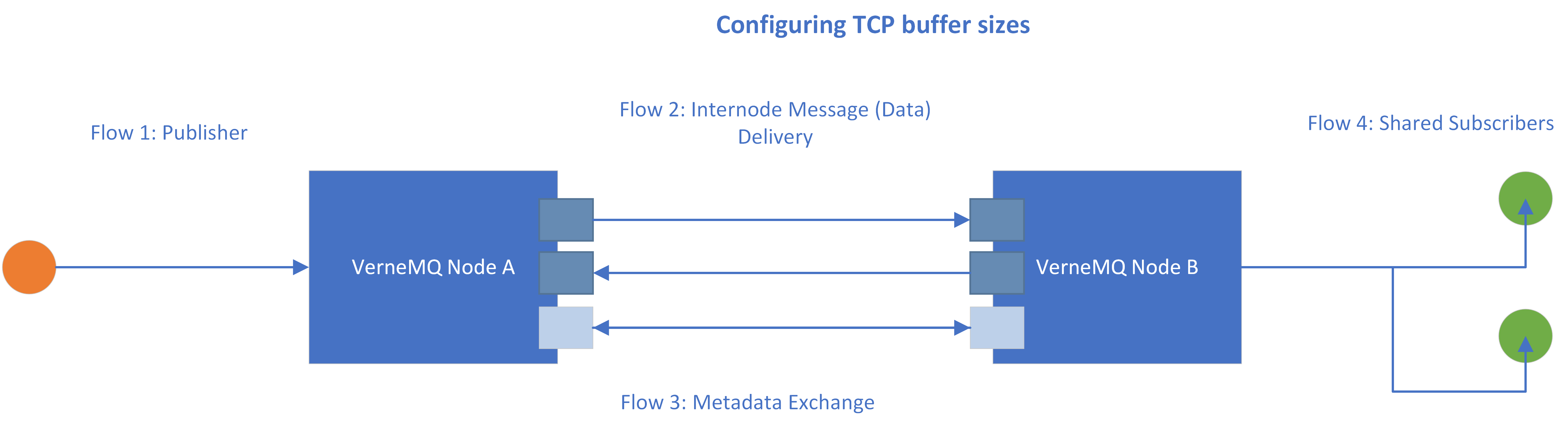

But let's look at the overall picture here, for a two-node VerneMQ example cluster.

You can see four flows: a Publisher Flow, an inter-node message exchange flow, a metadata exchange flow, and at the opposite end, a Subscriber Flow. Let's look at the configuration options you have for each flow.

Flow 1: Publishers

To take direct control over the buffer sizes here, you configure the MQTT/MQTTS listener in the vernemq.conf file as follows:

listener.tcp.my_publisher_listener.buffer_sizes=4096,16384,32768

Those 3 values represent the OS-level TCP sndbuf, the OS-level TCP recbuf, and an additional VerneMQ-level buffer that is used to handle the TCP connection. Only the VerneMQ-level buffer shows up as RAM usage in VerneMQ metrics.
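If you want to double-check which number ends up where, a small helper like the following can label the three positions. This is a hypothetical Python sketch for reading your own config lines, not part of VerneMQ; the function name and the returned dictionary layout are our assumptions.

```python
def parse_buffer_sizes(line):
    """Split a '...buffer_sizes=a,b,c' config line into named integer sizes."""
    key, _, value = line.partition("=")
    parts = [int(p.strip()) for p in value.split(",")]
    if len(parts) != 3:
        raise ValueError(f"expected 3 comma-separated sizes, got {len(parts)}")
    # Order as described in the text: sndbuf, recbuf, VerneMQ-level buffer.
    sndbuf, recbuf, buffer = parts
    return {"key": key.strip(), "sndbuf": sndbuf, "recbuf": recbuf, "buffer": buffer}


sizes = parse_buffer_sizes(
    "listener.tcp.my_publisher_listener.buffer_sizes=4096,16384,32768"
)
print(sizes)
```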

Flow 2: Internode Messages

A VerneMQ node listens on a vmq_listener for connections from other nodes to deliver messages. Those connections are a one-way transport. For this reason, at least 2 TCP connections exist between 2 clustered VerneMQ nodes for data delivery.

Currently, those listeners have buffers derived from the OS TCP configuration. (Specifically, VerneMQ sets those buffers to the maximum value found in the OS settings.)

We have an open feature request to make those buffers directly configurable too, but we haven't seen too many issues related to those buffers.

Flow 3: Metadata

Cluster state replication (SWC) uses the Erlang Distribution layer. You can set the Erlang side buffer by using erlang.distribution_buffer_size = 32MB in the vernemq.conf file. The default is 1024 KB (hint: that's quite low).
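To get a feel for the difference between the default and the example value, here is a tiny Python sketch that converts such size strings to bytes. The helper is hypothetical, and it assumes binary units (1 KB = 1024 bytes, 1 MB = 1024 KB); check the VerneMQ configuration reference if the exact unit interpretation matters to you.

```python
# Assumption: sizes use binary multiples (KB = 1024 bytes).
UNITS = {"KB": 1024, "MB": 1024**2, "GB": 1024**3}


def size_to_bytes(text):
    """Convert strings such as '32MB' or '1024 KB' to a number of bytes."""
    text = text.strip().upper()
    for suffix, factor in UNITS.items():
        if text.endswith(suffix):
            return int(text[: -len(suffix)].strip()) * factor
    return int(text)  # no suffix: treat the value as plain bytes


default_size = size_to_bytes("1024 KB")
configured_size = size_to_bytes("32MB")
print(f"default: {default_size} bytes, configured: {configured_size} bytes")
print(f"factor:  {configured_size // default_size}x")
```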

Flow 4: Subscribers

Here's where things get interesting.

Message Consumers (subscribers) connect to an MQTT listener just like Publishers do. But it doesn't necessarily have to be the same listener!

If your message distribution pattern can benefit from different TCP buffer sizes on the publisher side and the consumer side, the MQTT listeners are where you configure that. This is especially interesting in a fan-in scenario, with many devices sending small messages to a few shared subscribers or just one consumer. You'd want small TCP buffers at the incoming end, but bigger ones at the outgoing end.

So, again, configure the MQTT listener in the vernemq.conf file as follows:

listener.tcp.my_subscriber_listener.buffer_sizes=4096,16384,32768

Make sure you give the listener another name ("my_subscriber_listener" in the above example).

Those 3 values override and configure the OS-level TCP sndbuf, the OS-level TCP recbuf, and the VerneMQ-level buffer. Again, only the VerneMQ-level buffer shows up as RAM usage in VerneMQ metrics.
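For the fan-in case, the two listeners would simply get different numbers. The Python sketch below only renders the two config lines side by side; the helper name is hypothetical, and the subscriber-side sizes are made-up values to show the shape of the config, not a recommendation.

```python
def buffer_sizes_line(listener_name, sndbuf, recbuf, buffer):
    """Render a buffer_sizes line for a named TCP listener in vernemq.conf."""
    return f"listener.tcp.{listener_name}.buffer_sizes={sndbuf},{recbuf},{buffer}"


lines = [
    # Many publishers sending small messages: keep per-connection buffers small.
    buffer_sizes_line("my_publisher_listener", 4096, 16384, 32768),
    # Few consumers receiving everything: larger buffers (illustrative values).
    buffer_sizes_line("my_subscriber_listener", 32768, 65536, 131072),
]
print("\n".join(lines))
```

Try different values against your own traffic before settling on anything.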

That's it!

You now know how to control buffer sizes in a VerneMQ cluster directly, instead of mostly leaving it to the Operating System.

General Recommendation

Have you noticed that we always want you to think for yourself instead of copy/pasting configurations? :)

No two messaging scenarios are equal in our experience.

Think, try, experiment!

What we can say is that having a big range in your OS level TCP read and write buffers gives you the most flexibility. Doing this sets the limits for your manual configurations. VerneMQ then takes the maximum values for automatically configured buffers, while you still can lower buffer sizes within the VerneMQ config file.
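If you run on Linux, the OS-level ranges are typically exposed as "min default max" triples (for example via the net.ipv4.tcp_rmem and net.ipv4.tcp_wmem sysctl settings). The Python sketch below parses such a triple and checks whether a size you plan to configure falls inside it. The helper names and the example triple are our assumptions, and other kernel limits may also apply on your system.

```python
def parse_tcp_mem(value):
    """Parse a Linux 'min default max' triple such as net.ipv4.tcp_rmem."""
    minimum, default, maximum = (int(v) for v in value.split())
    return {"min": minimum, "default": default, "max": maximum}


def within_range(size, limits):
    """Return True if size lies between the parsed min and max (inclusive)."""
    return limits["min"] <= size <= limits["max"]


# Example values only; read your real ones with `sysctl net.ipv4.tcp_rmem`.
rmem = parse_tcp_mem("4096 131072 6291456")
for candidate in (1024, 16384, 8 * 1024 * 1024):
    print(candidate, "within OS range:", within_range(candidate, rmem))
```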

You may have spotted another "buffer" in the vernemq.conf file for which you can configure the size. The outgoing_clustering_buffer_size defaults to 10KB. Do not confuse this with any TCP buffers. This buffer is used as an internal queue to buffer inter-node message delivery in case the remote node is down/unreachable.

Also, note that the metrics related to cluster bytes (sent, received, dropped), such as cluster.bytes.sent, only record traffic for vmq_listeners, that is, Flow 2 (message delivery). There are currently no exposed metrics for SWC layer traffic.

Let us know if this answered more questions than it raised. :)